AI-assisted Competency Models

See how quickly you can draft high-quality competency statements with AI-assisted Competency Models and human-in-the-loop validation, then publish to your library, map to roles, and assess with evidence.

What it is and why it matters

Our AI assistant produces the first draft of a competency or competency model: competency names, definitions, subheadings (if any), indicators, and proficiency levels across Core, Leadership, Management, Technical, and Clinical competencies. You keep control: reviewers validate wording, set levels, and approve publication. The result is faster coverage, consistent structure, and a clear audit trail, without sacrificing quality.

Key Capabilities

- Ask for suggestions tailored to industry, location, and organization size/type

- Draft by job role and by competency type (Core/Leadership/Technical/Clinical)

- Generate observable indicators (as many as needed)

- Structure indicators with a proficiency framework (Novice → Expert), or keep it simple and work without levels

- Save drafts to your central competency library and, if applicable, to a draft mapped role profile for review

- Add assessment methods and evidence standards

How it works

- Select competency category/job family for library storage

- Provide context: role purpose, key tasks, risk profile, region, and compliance requirement notes.

- Provide sources: role descriptions, SOPs, training catalogs, quality/clinical policies.

- Generate drafts: competency, subheadings (if any), definition, and a specified number of indicators (4–5) per level, using your selected level scheme, if any

- Select only the relevant suggestions

- Ensure indicators are observable/measurable and level-appropriate.

- Review the role mapping and save your selection as drafts to the role profile, or only to the competency library

- Validate: Subject Matter Expert review, update, and sign-off (Clinical/Technical); stakeholder review for Core/Leadership.

- Publish: once a version is used in an assessment it is locked; prior versions are retained for history and audit.

- Operationalize: run assessments, capture evidence, schedule re-validation.
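
To make the draft-and-review steps above concrete, here is a minimal sketch of what a generated draft might look like as data, with a simple pre-review check on the number of indicators per level. The `CompetencyDraft` class, its field names, and the `review_flags` helper are hypothetical illustrations, not the product's actual data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class CompetencyDraft:
    """Hypothetical shape of an AI-generated draft awaiting human review."""
    name: str
    category: str        # e.g. "Clinical" or "Technical"
    definition: str
    # Maps a level name to its indicators. With no level scheme selected,
    # a single key such as "All" could hold every indicator (assumption).
    indicators: dict[str, list[str]] = field(default_factory=dict)
    status: str = "Draft"


def review_flags(draft: CompetencyDraft, max_per_level: int = 5) -> list[str]:
    """Return issues for a reviewer to look at; an empty list means none found."""
    flags = []
    if not draft.definition.strip():
        flags.append("missing definition")
    for level, items in draft.indicators.items():
        if not items:
            flags.append(f"{level}: no indicators")
        elif len(items) > max_per_level:
            flags.append(
                f"{level}: {len(items)} indicators, expected at most {max_per_level}"
            )
    return flags
```

A check like this only catches structural problems. Whether an indicator is observable, measurable, and level-appropriate remains a reviewer's judgment.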

Addressing the duplication problem

Generative AI is built on a language model, which works from words and their analyzed frequency and position relative to one another. As a result, when you use it as a suggestion engine for multiple roles, it will come up with many close variants. Our prompt and search engine will assess and show matches to existing material, but the human in the loop still needs to review the drafts.

- Run a keyword search and duplicate check in the library after generating your competency list

- Reuse existing competencies where possible

- Add only variations that are specific to particular roles and/or equipment use

- You can attach new suggested indicators to existing competencies

- Create a new competency only when the skill and standards are significantly different.
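
As an illustration of the duplicate check described above, the sketch below flags library entries whose names closely resemble a new suggestion. It uses Python's standard `difflib` string similarity as a stand-in; this is an assumption for illustration and not how the product's own search engine works. The function name and the 0.8 threshold are likewise hypothetical.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def find_near_duplicates(candidate: str, library: list[str], threshold: float = 0.8):
    """Return (existing_name, score) pairs at or above the threshold, best first."""
    cand = normalize(candidate)
    matches = []
    for existing in library:
        score = SequenceMatcher(None, cand, normalize(existing)).ratio()
        if score >= threshold:
            matches.append((existing, round(score, 2)))
    return sorted(matches, key=lambda m: m[1], reverse=True)
```

A reviewer would still decide whether a flagged match should be reused, extended with new indicators, or kept separate because the skill and standards differ materially.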

Validation & Governance

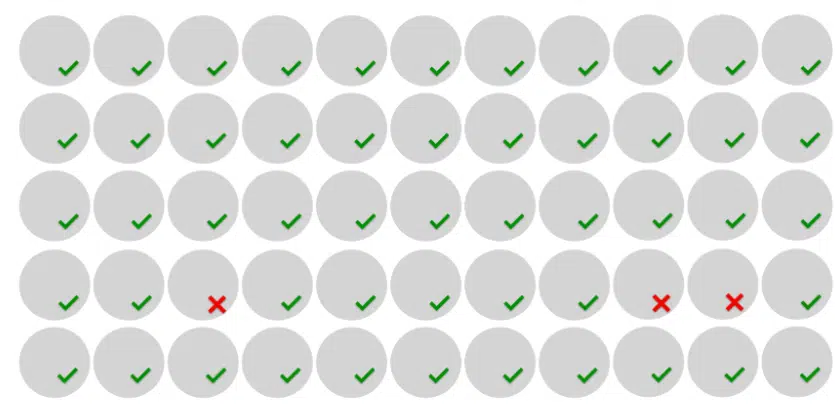

AI makes mistakes, so you must have an effective process for catching and correcting them.

- Human-in-the-loop is mandatory: Subject Matter Experts approve every item prior to publication.

- Bias control: check for subjective adjectives and keep to one behavior per indicator/standard

- Regulatory standards: include the source in the text and check that it is accurate.

- Versioning & audit: capture the owner and date on each publication status change. The status updates automatically to In Use when the item is used in an assessment.

- Access & security: use permissions to manage library editing and publication rights by competency type, category, job family, and organizational unit.
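
The versioning and locking rules above can be pictured as a small status lifecycle. The sketch below is a hypothetical model, not the product's implementation: the `LibraryItem` class, its method names, and the exact set of statuses (Draft, Published, In Use) are assumptions based on the wording on this page.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class LibraryItem:
    """Hypothetical competency library item with an audit trail."""
    name: str
    status: str = "Draft"
    history: list = field(default_factory=list)  # (status, owner, date) tuples

    def _set_status(self, status: str, owner: str, on: date) -> None:
        # Record who changed the status and when, as the audit rule requires.
        self.status = status
        self.history.append((status, owner, on))

    def publish(self, approved_by: str, on: date) -> None:
        if not approved_by:
            raise ValueError("SME approval is required before publishing")
        if self.status != "Draft":
            raise ValueError("only drafts can be published")
        self._set_status("Published", approved_by, on)

    def mark_used_in_assessment(self, owner: str, on: date) -> None:
        if self.status != "Published":
            raise ValueError("only published items can be assessed")
        self._set_status("In Use", owner, on)

    def edit(self, new_name: str) -> None:
        # Assumption: items stay editable until first used in an assessment.
        if self.status == "In Use":
            raise PermissionError("locked after use in an assessment; create a new version")
        self.name = new_name
```

Keeping every status change in `history` is what makes it possible to answer who approved an item and when it became locked.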

In a continuously changing environment, competencies evolve over time and in response to feedback after assessment rounds. Our library and competency management process are designed to facilitate updates.

How effective is AI: where it helps and where to be cautious

☑️ Strengths: speed, consistency, and coverage across many roles; accelerates first drafts and reduces admin.

⚠️ Cautions: niche or emerging technologies may need more Subject Matter Expert input; generic suggestions must be adapted to your equipment, SOPs, and risk profile.

Always validate indicators, levels, and evidence requirements before publishing to your library.

Try it now

FAQs

What is AI-assisted competency modelling?

It’s a workflow where AI drafts competency definitions, indicators, and levels from your approved context (roles, SOPs, policies), then Subject Matter Experts (SMEs) select from suggestions, review, edit if needed, and approve before anything is published.

Will AI replace our SMEs?

No. AI speeds up first drafts; SMEs stay the decision-makers for wording, level calibration, evidence standards, and sign-off.

How accurate are the suggestions?

Accuracy depends on input quality and human review. Provide clear role context and sources; use our competency statement guidelines to keep indicators observable and level-appropriate.

What inputs should we provide?

Role purpose, key responsibilities and tasks, risk profile, regional/compliance notes, plus relevant SOPs/policies and training catalog excerpts. Do not include confidential personal data.

How do you prevent duplicates across roles?

Competencies are suggested per category/job family, and we encourage library reuse. Use the library search to check AI suggestions against existing items. Create new items only when the skill or standard is materially different, and assign them to the appropriate proficiency level if you are using levels.

How are proficiency levels handled?

Proficiency levels indicate differences in task scope, independence, risk, and complexity. Request no more than 4–5 indicators per level, then review them to ensure alignment with your proficiency level definitions.

How do we validate and audit changes?

Use human-in-the-loop sign-off. Publication status should indicate whether statements are editable or locked after use in assessments. The record should also show when the item was last reviewed or edited, and by whom.

Is this suitable for regulated (clinical/technical) content?

Yes, and SME validation is mandatory. Link competencies and indicators to the actual standard/policy, and keep certifications separate in role requirements while referencing them.

Where do suggestions come from and is our data private?

Suggestions are generated from patterns in language models plus your approved inputs. Models do not share your information, but you should still not include personal information in your inputs. Your competency content is stored with per-client database isolation and activity logging.

How quickly can we go live?

Allowing time to gather the required background/context information, typical pilots publish 3 roles in 30 days, expand to job families within 60–90 days, then map to roles and assessments.

Does it integrate with assessments and learning?

Yes. Publish to the competency library, map via competency mapping, then assess in Assessment & Analytics with evidence standards. You can reference learning resources for competency gaps separately.

Does it support multiple languages?

Yes, the competency modules are multilingual. There is a designated foundation language so that reporting is standardized, and users select their preferred language. You are responsible for the translations.