Competency Assessment - fair, evidence-based evaluation

Competency assessment is essential to evaluating real skills in context—but bias and subjectivity often undermine its effectiveness. Learn how to implement competency assessment that is structured, fair, and grounded in evidence.

What Is Competency Assessment?

Competency assessment is the structured evaluation of how well an individual demonstrates job-relevant skills and behaviors in practice.

Unlike qualifications or training, which infer potential, competency assessment focuses on actual performance in context. It asks:

Can this person apply the right skill, at the right level, in their specific role?

It’s a cornerstone of modern people management, used for:

- Workforce capability diagnostics

- Targeted development planning

- Career and succession decisions

- Performance reviews with real substance

Why Competency Assessment Is Difficult to Get Right

The challenge? Competency is often invisible unless we define it and observe it intentionally. Without a structured approach, assessments tend to be:

- Vague (“seems capable”)

- Inconsistent (varies by manager)

- Unfair (influenced by personality or perception)

- Unusable (no actionable data)

That’s why we need a systematic assessment framework—and vigilance about bias.

What Competency Assessment Is NOT

To clarify, competency assessment is not:

❌ A personality test

❌ A list of completed courses

❌ A performance rating based on output alone

❌ A subjective “gut feel”

❌ A proxy for seniority or time in role

It is a deliberate process of evaluating how someone performs specific behaviors that reflect key competencies, in the context of their job.

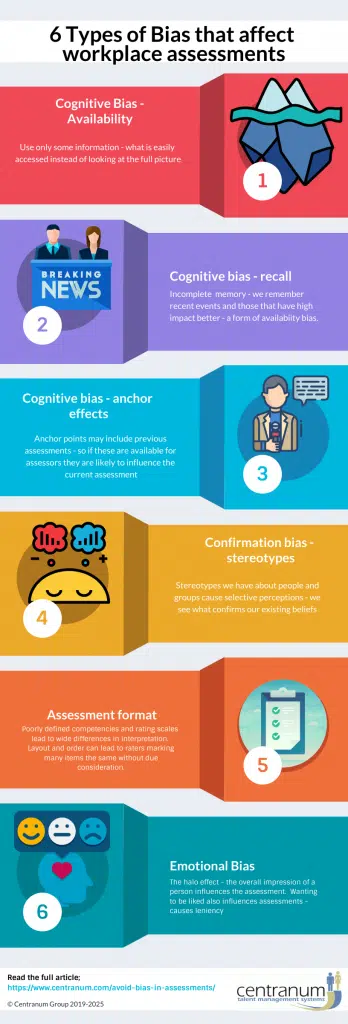

Bias in Competency Assessments

Bias is the #1 risk to valid, credible assessments. It distorts results, undermines trust, and leads to poor talent decisions.

Here’s how to recognize and reduce bias throughout the competency assessment process:

Avoiding Bias in Competency Assessment

Define the Competency Clearly

Every assessment must start with well-defined competencies, using clear descriptions of expected behavior relevant to the role.

Example:

Instead of “Good communication,” define it as:

“Explains technical issues clearly to non-technical stakeholders; adapts tone and detail to audience.”

Why this matters: Ambiguity invites interpretation—and interpretation opens the door to bias.

Ground Assessments in Behavior and Context

Make assessments about what the person did, not how they are perceived. Require examples or observable evidence.

Good: “Presented the new QA checklist to the team and answered implementation questions with clarity.”

Poor: “Seems confident when talking.”

This ensures assessments are evidence-based, not personality-based.

Use a Structured Assessment Method

Use a consistent method for all assessments:

- Self-assessment

- Manager review

- Peer/360 feedback

- Evidence submissions

- Optional tests or scenarios

Structure and consistency reduce randomness and make assessments easier to compare and trust.

Distinguish Assessment from Feedback

Assessments must judge behavior against a standard, not how the behavior made someone feel.

Assessment: “Demonstrates the ability to train others effectively on process changes.”

Feedback: “I appreciate how you explained the new process.”

Use feedback to support development, not to define performance.

Build in Calibration Mechanisms - especially for performance assessment

Don’t rely on a single manager’s opinion. Instead:

- Use auto scoring or suggested rating schemes

- Set up cross-checks and moderation (e.g. HR, a second manager)

- Use analytics to flag rater patterns

- Require justification for high/low ratings

- Conduct rater training

Systems like Centranum offer rating distribution visualizations and bias alerts to help identify and correct patterns early.
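As a rough illustration of what "using analytics to flag rater patterns" can mean in practice, here is a minimal Python sketch. It is not Centranum's implementation; the function name, the thresholds, and the sample data are all hypothetical. It assumes ratings on a shared numeric scale and flags raters whose average sits well above or below the overall average, or whose ratings barely vary.

```python
from collections import defaultdict
from statistics import mean, pstdev


def flag_rater_patterns(ratings, max_offset=0.75, min_spread=0.3, min_count=3):
    """Flag raters whose ratings look lenient, severe, or undifferentiated.

    ratings: list of (rater, ratee, score) tuples on one numeric scale.
    All thresholds are illustrative assumptions, not recommended values.
    """
    by_rater = defaultdict(list)
    for rater, _ratee, score in ratings:
        by_rater[rater].append(score)

    overall = mean(score for _, _, score in ratings)
    flags = {}
    for rater, scores in by_rater.items():
        if len(scores) < min_count:
            continue  # too few ratings to call it a pattern
        reasons = []
        offset = mean(scores) - overall
        if offset > max_offset:
            reasons.append("lenient")
        elif offset < -max_offset:
            reasons.append("severe")
        if pstdev(scores) < min_spread:
            reasons.append("low spread")  # nearly identical ratings for everyone
        if reasons:
            flags[rater] = reasons
    return flags


# Hypothetical ratings on a 1-3 scale
sample = [
    ("ana", "p1", 3), ("ana", "p2", 2), ("ana", "p3", 1),
    ("ben", "p4", 3), ("ben", "p5", 3), ("ben", "p6", 3),
    ("cy", "p7", 1), ("cy", "p8", 2), ("cy", "p9", 2),
]
print(flag_rater_patterns(sample))
# {'ben': ['lenient', 'low spread']}
```

A flag is a prompt for a moderation conversation, not proof of bias: a rater may simply manage a strong team, which is why the flagged ratings should be checked against the recorded evidence.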

Best Practices for Competency Assessment

| Practice | Benefit |
|---|---|
| Use real job scenarios | Ensures relevance and validity |
| Require evidence or examples | Reduces subjectivity |
| Limit the number of competencies per role | Increases focus and clarity |
| Combine self- and manager assessment | Balances perspectives |
| Enable regular reassessment | Captures growth and change |

Centranum’s Approach to Competency Assessment

Centranum enables structured, fair, and role-relevant assessment with:

- Clear competency definitions embedded in the system

- Configurable workflows for self, manager, and multi-rater input

- Evidence capture via journals, logs, or attachments

- Built-in prompts for justification and rating calibration

- Dashboards to highlight trends, gaps, and outliers

- Linkages to development planning and performance tracking

➡️ Explore the Competency Assessment & Analytics Module

Supporting Modules for Competency Assessment

Performance Review Module

Competency assessments can be integrated with structured evaluations using the Performance Review Module. This ensures alignment with job expectations and provides a formal mechanism for capturing competency ratings in a consistent format.

Journal Module (Evidence Log)

Supporting documentation is essential for credible assessments. The Journal Module allows individuals and managers to log real-time performance evidence, which can then be linked to specific competencies or indicators.

360 Feedback Module

To balance perspectives and reduce rater bias, the 360 Feedback Module enables structured input from peers, managers, and others. This supports a more well-rounded and objective assessment process.

FAQs

How is competency assessment different from performance review?

Performance reviews often focus on goals and outcomes. Competency assessment focuses on the behaviors and skills used to get there. Because performance reviews often feed decisions on advancement and compensation, that purpose can influence ratings and reduce their accuracy.

Values-based behaviors are often included in performance appraisal, whereas role-specific knowledge and skills are evaluated through competency assessment, which has a developmental focus. That developmental purpose prompts evaluations that are more accurate and helpful.

Can we assess competencies without using ratings?

Yes. You can use narrative examples, but then you cannot easily use analytics to track competency gaps, levels and growth over time.

Is self-assessment reliable?

It’s a useful input, especially when paired with well-defined competency standards, but it should always be validated by a manager or expert assessor.

How often should competency assessment be done?

Ideally, at least annually—or whenever roles, responsibilities, or team needs change.

Can we start without proficiency levels?

Yes, but assessments will be much more subjective. Competency criteria that follow a simple 3-level proficiency scheme improve consistency dramatically.
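To make the idea concrete, here is a minimal Python sketch of a 3-level scheme and of the kind of gap tracking that ratings make possible. The level labels, competency names, and the `competency_gaps` helper are hypothetical, chosen only for illustration; a real scheme would attach behavioral criteria to each level.

```python
from enum import IntEnum


class Level(IntEnum):
    # Hypothetical 3-level scheme; the labels are illustrative only.
    DEVELOPING = 1
    PROFICIENT = 2
    ADVANCED = 3


def competency_gaps(required, assessed):
    """Return {competency: shortfall in levels} for each unmet requirement.

    A value of None means the competency has not been assessed yet.
    """
    gaps = {}
    for competency, need in required.items():
        have = assessed.get(competency)
        if have is None:
            gaps[competency] = None  # no assessment on record
        elif have < need:
            gaps[competency] = int(need) - int(have)
    return gaps


# Hypothetical role requirements and one person's assessed levels
required = {
    "Stakeholder communication": Level.PROFICIENT,
    "Process training": Level.ADVANCED,
    "QA review": Level.PROFICIENT,
}
assessed = {
    "Stakeholder communication": Level.PROFICIENT,
    "Process training": Level.DEVELOPING,
}
print(competency_gaps(required, assessed))
# {'Process training': 2, 'QA review': None}
```

Because the levels are ordered numbers, the same comparison can be repeated at each reassessment to see whether a gap is closing, which narrative-only assessments cannot easily support.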