AI & Decision Quality in Talent Systems

Artificial intelligence is now embedded across talent technology. From drafting competency models to screening applicants and analysing feedback, AI is increasingly part of how organisations make decisions about people.

The critical question is not whether AI is present. It is how it is governed.

AI can improve speed, consistency, and administrative efficiency. But decisions affecting hiring, promotion, development, and succession still depend on clearly defined standards, structured measurement, and human accountability.

Key Definitions

Automation – Rule-based execution of predefined logic.

Machine Learning – Pattern detection based on historical data.

Large Language Model (LLM) – A probabilistic system that predicts likely next words based on training data.

Decision Boundary – The defined point at which human judgement determines sufficiency.

Governance – Controls ensuring criteria, measurement, and approvals remain explicit and reviewable.

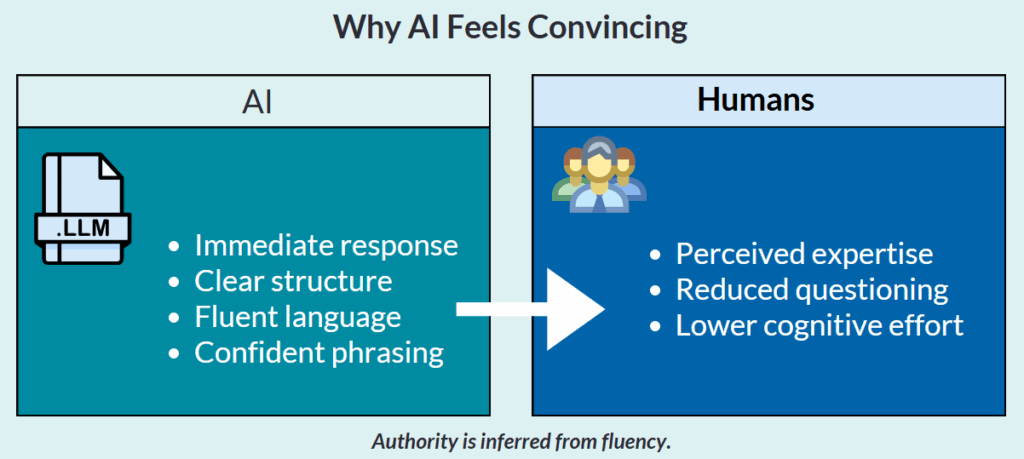

Why AI Feels Authoritative

AI systems:

- Respond fluently

- Mirror user language

- Provide confident summaries

- Appear neutral

Psychological mechanisms reinforce trust:

- Attribution of intentionality

- Authority bias

- Confirmation bias

- Reinforcement through agreeable tone

The issue is not that users attribute sentience. It is that AI is perceived as objective.

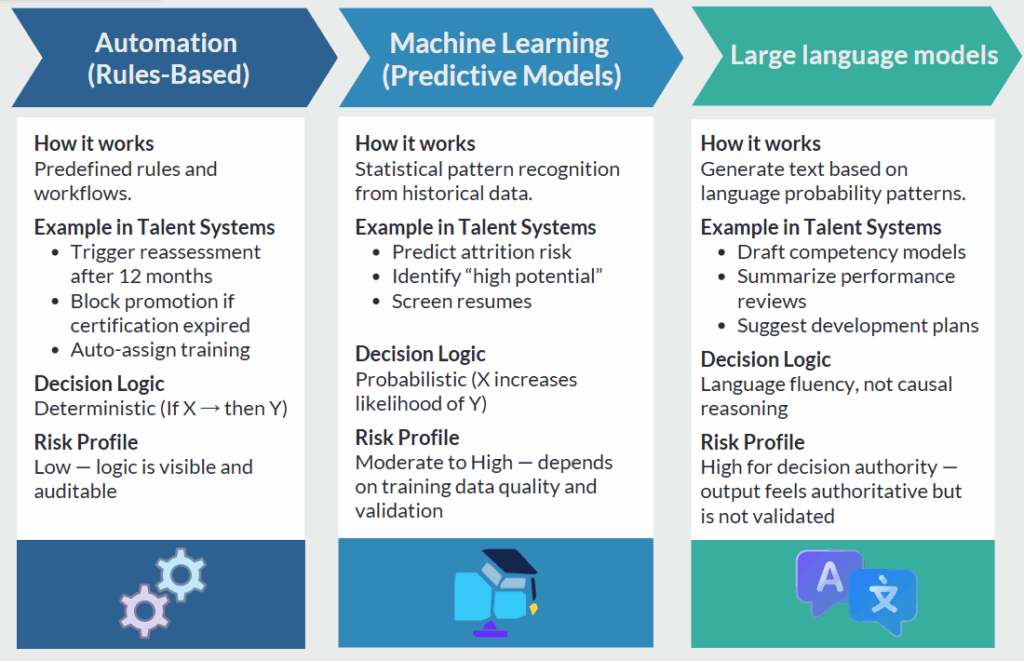

What “AI” Means in Talent Systems

“AI” is used to describe very different capabilities. In practice, most systems fall into three categories.

Automation (rules and workflows)

Automation applies predefined rules. Examples include:

- Assigning learning based on role

- Triggering reassessment reminders

- Routing approvals based on role or entity

- Enforcing required steps before sign-off

Automation follows rules. It does not infer.
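To make the distinction concrete, here is a minimal sketch of rule-based learning assignment. The role names, module titles, and the `assign_learning` function are hypothetical and chosen only for illustration; a real system would hold its rules in configuration rather than in code.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical rule table: role -> required learning modules.
REQUIRED_LEARNING = {
    "site_supervisor": ["Safety Leadership", "Incident Reporting"],
    "analyst": ["Data Privacy Basics"],
}


@dataclass
class Employee:
    name: str
    role: str


def assign_learning(employee: Employee) -> list[str]:
    """Return the modules the rule table prescribes for this role."""
    # A role with no rule gets nothing: the system does not guess.
    return list(REQUIRED_LEARNING.get(employee.role, []))
```

Every output can be traced to a named rule, and a role that is missing from the table produces no assignment rather than an inferred one.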

Machine learning (pattern-based models)

Machine learning models use statistical optimisation techniques to detect patterns and estimate probabilities from historical data. They are designed to improve predictive accuracy, not to determine causation or intent.

Examples include:

- Predicting attrition risk based on previous patterns

- Identifying correlations in engagement or performance data

- Classifying items based on prior labelled examples
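As a simplified illustration of estimating probabilities from historical data, the sketch below computes observed attrition frequencies per tenure band. It is plain frequency counting rather than a trained model, and the records, band labels, and `attrition_rates` function are invented for the example.

```python
from collections import defaultdict

# Hypothetical historical records: (tenure_band, left_within_year).
HISTORY = [
    ("0-1 years", True), ("0-1 years", True),
    ("0-1 years", False), ("0-1 years", False),
    ("2-5 years", True), ("2-5 years", False),
    ("2-5 years", False), ("2-5 years", False),
]


def attrition_rates(records):
    """Estimate the share of leavers per tenure band from past records."""
    counts = defaultdict(lambda: [0, 0])  # band -> [leavers, total]
    for band, left in records:
        counts[band][0] += int(left)
        counts[band][1] += 1
    return {band: leavers / total for band, (leavers, total) in counts.items()}


rates = attrition_rates(HISTORY)
```

The result describes what happened in the past for each group. It says nothing about why people left, and it will reproduce whatever imbalance the historical records contain.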

Large language models (LLMs)

Large language models generate text by predicting likely word sequences based on training data.

They are used for:

- Drafting competencies

- Summarising feedback and conversations

- Generating reports

- Suggesting development actions

LLMs do not reason about truth. They generate probabilistic outputs based on patterns in language.
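The principle of predicting a likely next word can be shown with a toy word-pair counter. This is far simpler than any real LLM, and the miniature corpus and function names are assumptions made for illustration, but it shows how a continuation is chosen by frequency rather than by checking whether it is true.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training data".
CORPUS = (
    "communicates clearly with stakeholders . "
    "communicates clearly with peers . "
    "communicates clearly with stakeholders ."
).split()


def next_word_probabilities(tokens):
    """Count which word follows which, then normalise to probabilities."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return {
        word: {nxt: n / sum(counter.values()) for nxt, n in counter.items()}
        for word, counter in follows.items()
    }


MODEL = next_word_probabilities(CORPUS)


def most_likely_next(word):
    """Return the highest-probability continuation seen in the corpus."""
    options = MODEL[word]
    return max(options, key=options.get)
```

Here "with" is followed by "stakeholders" more often than by "peers", so that is the continuation the model prefers, regardless of which is accurate for a given employee.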

Understanding these distinctions matters when evaluating claims about a system's AI capabilities.

What AI Actually Does in Talent Systems

AI typically performs one or more of the following:

- Drafting competency models

- Summarising performance feedback

- Clustering survey responses

- Recommending development actions

- Ranking or screening applicants

- Highlighting potential risk patterns

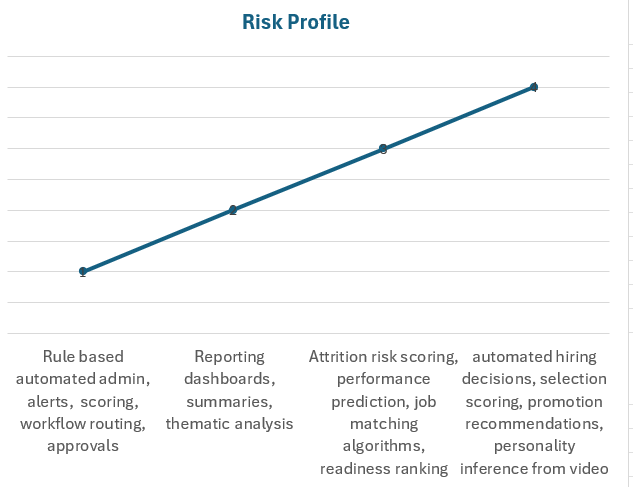

Where AI Adds Value

AI performs well in:

- Drafting structured content for human review

- Summarising large volumes of qualitative data

- Pattern detection in workforce analytics

- Automating administrative workflows

- Highlighting exceptions or anomalies

In these areas, AI enhances productivity and consistency.

Where Decision Risk Increases

Risk increases when AI influences decisions that determine:

- Hiring or promotion

- Capability readiness

- Performance classification

- Succession eligibility

- Compensation adjustments

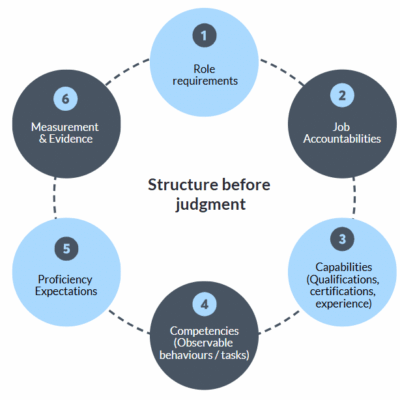

In these cases, AI output must operate within a defined decision architecture:

- Observable role criteria

- Explicit proficiency expectations

- Structured measurement methods

- Clear evidence requirements

- Defined approval pathways

- Audit history and version control

Without this structure, AI output seems authoritative but may lack validity.
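One way to picture this decision architecture is as a record that cannot be finalised until every element is present. The sketch below is illustrative only: `DecisionRecord`, its field names, and `can_finalise` are hypothetical and do not describe any particular product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DecisionRecord:
    """Hypothetical record for one talent decision informed by AI output."""
    role_criteria: list[str] = field(default_factory=list)
    proficiency_level: str | None = None
    measurement_method: str | None = None
    evidence: list[str] = field(default_factory=list)
    approver: str | None = None          # named human on the approval pathway
    criteria_version: str | None = None  # supports audit and version control
    ai_recommendation: str | None = None


def missing_elements(record: DecisionRecord) -> list[str]:
    """List which parts of the decision architecture are absent."""
    checks = {
        "observable role criteria": bool(record.role_criteria),
        "explicit proficiency expectation": record.proficiency_level is not None,
        "structured measurement method": record.measurement_method is not None,
        "evidence": bool(record.evidence),
        "defined approval pathway": record.approver is not None,
        "version control": record.criteria_version is not None,
    }
    return [name for name, present in checks.items() if not present]


def can_finalise(record: DecisionRecord) -> bool:
    """An AI recommendation alone never makes a record complete."""
    return not missing_elements(record)
```

A record holding only an `ai_recommendation` fails the check, which mirrors the point above: the recommendation is an input to the decision, not the decision itself.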

(See how measurement and evidence design affect decision quality.)

AI and Merit-Based Decisions

AI does not determine merit. Merit-based decisions depend on:

- Defined standards

- Transparent rules

- Documented rationale

- Consistent application

AI reflects the structure it operates within.

When standards are explicit and measurement is structured, AI can support consistency. When they are not, AI may amplify ambiguity. AI cannot improve fairness without clearly defined decision criteria in talent systems.

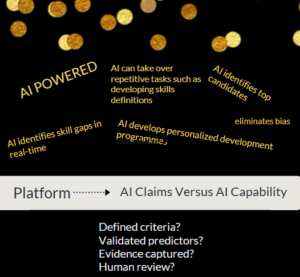

AI Washing and Vendor Claims

“AI washing” refers to inflated or ambiguous claims about artificial intelligence capability. In talent systems, the label “AI-powered” may obscure whether decisions are governed by defined criteria, structured measurement, and human approval pathways. Questions to consider:

- What decision does the AI influence?

- Is the decision boundary defined?

- Are criteria explicit?

- Is scoring logic transparent?

- Can outputs be reconstructed and reviewed?

- Is human sign-off required?

AI that operates without visible structure introduces governance risk. Technology labels are less important than decision transparency.

Human Judgement Still Matters

AI can assist with pattern recognition and summarisation. It cannot replace:

- Contextual understanding

- Ethical reasoning

- Interpretation of ambiguous situations

- Accountability for high-stakes decisions

The most effective systems maintain humans “in the loop” — particularly at defined decision boundaries.

Practical AI & Decision Quality Checklist

Before deploying AI in talent decisions, organisations should confirm:

⬜️ Observable role criteria are defined before AI is applied

⬜️ Proficiency expectations are explicit

⬜️ Measurement methods are structured

⬜️ Evidence requirements are documented

⬜️ AI outputs are reviewable

⬜️ Human approval points are clear

⬜️ Reporting allows analysis by individual, team, and entity

⬜️ Decision history can be reconstructed

These safeguards enable AI to support — rather than distort — decision quality.

Use a structured approach to evaluate capability and competency management systems before accepting AI claims.

Related Decision Quality Resources

Meritocracy and Fair Talent Decisions

Defining Decision Criteria in Talent Systems

Measurement and Evidence in Talent Systems

Mitigating Bias in Assessment Processes

Evaluating Capability & Competency Management Systems

FAQs

Is AI reliable for hiring and promotion decisions?

AI can support structured analysis, but reliability depends on the validity of criteria, the quality of training data, and the transparency of the model. AI predictions are probabilistic and should not replace defined standards and human oversight.

What is the difference between automation and AI in talent systems?

Automation follows predefined rules. Machine learning identifies statistical patterns. Large language models generate text based on probability. These technologies differ significantly in risk and decision impact.

Can AI eliminate bias in talent decisions?

AI can replicate or amplify existing bias if training data reflects historical imbalance. Bias mitigation requires defined criteria, balanced data, transparency, and governance.

What is AI washing in HR technology?

AI washing refers to inflated or vague claims that software is “AI-powered” without clear explanation of the underlying model, validation, or decision boundaries.

Should AI make autonomous talent decisions?

High-stakes decisions such as hiring, promotion, or termination should not be fully automated. AI is best used as decision support within defined governance structures.

What questions should organisations ask vendors about AI?

Organisations should ask:

- What model type is used?

- What data was used for training?

- How is validity demonstrated?

- How is bias monitored?

- What role does human oversight play?

Does AI improve merit-based decision making?

AI can support merit-based decisions only when clear decision criteria, structured verification methods, and defined evidence requirements are already in place.

Without defined standards, AI output may appear authoritative and precise but may not be valid. When treated as objective without verification, such output can distort judgement and adversely impact hiring, promotion, development, and succession decisions.