Measurement & Evidence in Talent Systems

Verification, Validity, and Defensibility

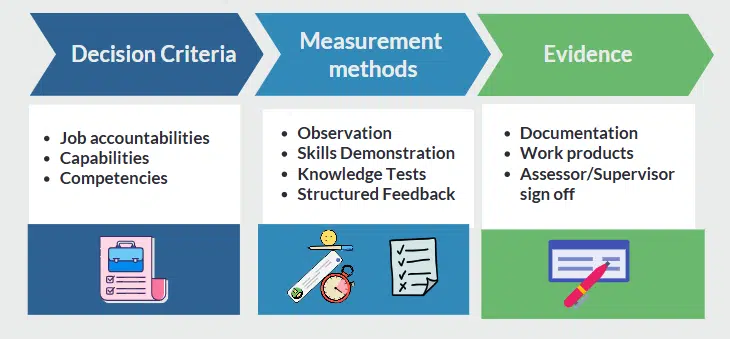

- Defining decision criteria establishes what is required.

- Measurement determines whether those requirements have been met.

Measurement and criteria are distinct components of a defensible talent system.

Key Definitions

Measurement Foundations

Measurement – The method used to assess achievement against defined criteria.

Evidence – Verifiable information demonstrating that a requirement has been met.

Evidence Requirement – The defined proof needed to confirm achievement.

Reliability – Consistency of results when assessment is repeated under similar conditions.

Validity – The extent to which measurement reflects the intended requirement.

Measurement Comes After Criteria Definition

Criteria define observable standards. Measurement determines the extent to which those standards are achieved.

A rating scale, test score, observation form, or narrative summary does not define the requirement. It assesses performance against it.

When criteria and measurement are conflated:

- Ratings become proxies for judgement

- Evidence is inconsistently interpreted

- Decisions cannot be reconstructed reliably

Measurement must follow defined, observable criteria. For example, consider the defined criterion:

Produces a monthly report summarizing interactions with key account customers, reconciled to CRM records, and documenting agreed follow-up actions.

Measurement does not redefine the standard. It verifies whether the defined elements are present. Verification may involve:

- Confirming the report was produced within the required period

- Checking reconciliation against CRM records

- Confirming follow-up actions are documented

The measurement mechanism may be:

- Supervisor review

- Structured checklist

- Random audit

- System-based validation

The criterion defines the requirement. The measurement verifies compliance with that requirement.
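The verification steps for the monthly report criterion can be expressed as a structured checklist. The sketch below assumes a hypothetical `MonthlyReport` record and hypothetical helpers `verify_monthly_report` and `criterion_met`. It checks each defined element separately and does not change the criterion.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class MonthlyReport:
    # Hypothetical record of one submitted key account report
    produced_on: date
    crm_reconciled: bool  # recorded by whoever checked the report against CRM records
    follow_up_actions: list[str] = field(default_factory=list)


def verify_monthly_report(report: MonthlyReport, period_start: date, period_end: date) -> dict[str, bool]:
    """Check each element of the defined criterion; the criterion itself is not altered."""
    return {
        "produced_within_period": period_start <= report.produced_on <= period_end,
        "reconciled_to_crm": report.crm_reconciled,
        "follow_up_actions_documented": len(report.follow_up_actions) > 0,
    }


def criterion_met(results: dict[str, bool]) -> bool:
    # Every defined element must be present; this sketch gives no partial credit
    return all(results.values())


report = MonthlyReport(date(2024, 3, 28), crm_reconciled=True)
results = verify_monthly_report(report, date(2024, 3, 1), date(2024, 3, 31))
print(results)                 # follow_up_actions_documented is False
print(criterion_met(results))  # False
```

Returning one result per element, rather than a single pass/fail, keeps the record of *which* part of the standard was not met.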

What Constitutes Measurement?

Measurement mechanisms in talent systems may include:

- Rating scales

- Structured observation

- Knowledge assessments

- Practical demonstrations

- Documentation review

- Documented case examples

- Output or performance metrics

Each mechanism produces data. The data must be evaluated against defined standards.

Rating Scales Are Instruments, Not Standards

Rating scales record the extent to which defined standards are achieved; they do not replace the standards themselves. Because they are recording instruments, their design directly influences consistency and defensibility.

Effective scale design requires:

- Clear point labels, so that each rating means the same thing to every assessor

- Defined achievement thresholds

- Application to defined criteria rather than broad descriptions

Where stakes are high, additional descriptive guidance on the scale points may be required to reduce interpretive variation. (Detailed considerations regarding scale structure are addressed separately.)

Evidence Requirements

Measurement becomes defensible only when linked to defined evidence. Evidence may include:

- Direct observation records

- Supervisor validation

- Peer verification

- Test results

- Documented outputs

- Records of completion of defined tasks

- Regulatory certifications

Evidence requirements should be explicit. For example:

- Number of observed demonstrations required

- Minimum test score

- Verified independent completion of defined tasks

- Time-based recertification intervals

Undefined evidence expectations introduce inconsistency, because each assessor applies their own view of what is sufficient.
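Explicit evidence requirements can be held as data and checked mechanically. The sketch below covers the first three example requirements (the recertification interval is left out to keep it short). `EvidenceRequirement`, `EvidenceSubmitted`, `evidence_gaps`, and the threshold values are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EvidenceRequirement:
    # Illustrative thresholds; real values come from the defined criteria
    min_observed_demonstrations: int
    min_test_score: float
    requires_independent_completion: bool


@dataclass
class EvidenceSubmitted:
    observed_demonstrations: int
    test_score: Optional[float]  # None if no test result has been recorded
    independent_completion_verified: bool


def evidence_gaps(req: EvidenceRequirement, ev: EvidenceSubmitted) -> list[str]:
    """Return each unmet requirement; an empty list means the evidence is sufficient."""
    gaps = []
    if ev.observed_demonstrations < req.min_observed_demonstrations:
        gaps.append(
            f"observed demonstrations: {ev.observed_demonstrations} of {req.min_observed_demonstrations}"
        )
    if ev.test_score is None or ev.test_score < req.min_test_score:
        gaps.append(f"test score missing or below minimum of {req.min_test_score}")
    if req.requires_independent_completion and not ev.independent_completion_verified:
        gaps.append("independent completion not verified")
    return gaps


req = EvidenceRequirement(min_observed_demonstrations=3, min_test_score=80.0,
                          requires_independent_completion=True)
ev = EvidenceSubmitted(observed_demonstrations=2, test_score=85.0,
                       independent_completion_verified=True)
print(evidence_gaps(req, ev))  # ['observed demonstrations: 2 of 3']
```

Because every assessor applies the same `EvidenceRequirement`, two people reviewing the same evidence reach the same conclusion about sufficiency.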

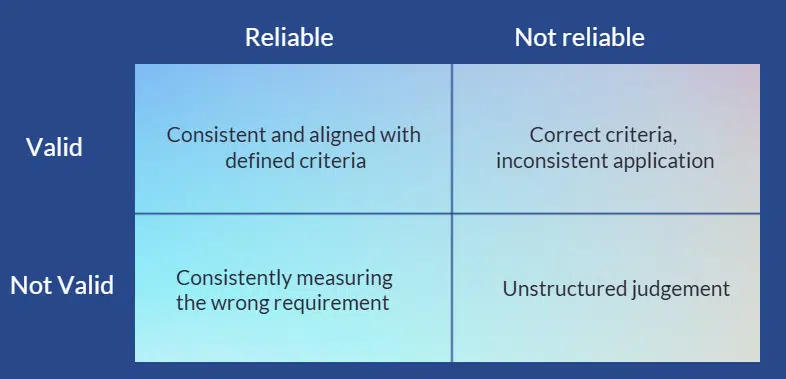

Reliability and Validity

Measurement quality depends on two distinct concepts.

Reliability refers to consistency.

If an assessment is repeated under the same conditions, it should produce the same result.

Clear scale definitions and structured calibration improve reliability.

Validity refers to accuracy of inference.

The measurement must reflect the defined, observable criteria it is intended to assess.

A system may be reliable but not valid.

Consistent ratings are not sufficient if the underlying criteria are poorly defined or open to different interpretations.

Defensible talent decisions require both.
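During calibration, consistency between assessors is often quantified. The sketch below uses percent agreement and Cohen's kappa, a widely used statistic that corrects for agreement expected by chance; it is one common option, not a method this section prescribes. The function names and sample ratings are hypothetical. Note that a high kappa demonstrates reliability only: two assessors can agree consistently while measuring the wrong thing.

```python
from collections import Counter


def percent_agreement(a: list[int], b: list[int]) -> float:
    """Share of cases where two assessors gave the same rating."""
    if len(a) != len(b) or not a:
        raise ValueError("expected two non-empty rating lists of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[int], b: list[int]) -> float:
    """Agreement between two assessors, corrected for agreement expected by chance."""
    p_observed = percent_agreement(a, b)
    n = len(a)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability both assessors pick the same point independently
    p_chance = sum((count_a[c] / n) * (count_b[c] / n) for c in set(count_a) | set(count_b))
    if p_chance == 1:
        # Both used one identical rating throughout; kappa is undefined, treated here as 1
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


# Two assessors rating the same eight people on a 1-4 scale
assessor_a = [3, 2, 4, 3, 1, 2, 3, 4]
assessor_b = [3, 2, 3, 3, 1, 2, 4, 4]
print(percent_agreement(assessor_a, assessor_b))      # 0.75
print(f"{cohens_kappa(assessor_a, assessor_b):.2f}")  # 0.65
```

Raw agreement of 75% drops to a kappa of about 0.65 once chance agreement is removed, which is why kappa is usually preferred when comparing assessors.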

Historical Data Review

Defensible systems allow review of:

- What criteria were in effect

- What measurement instrument was used

- What evidence was provided

- Who approved the outcome

- When the assessment occurred

Without this capability, readiness or capability claims cannot be verified retrospectively. In regulated or safety-critical contexts, this introduces operational and legal risk.
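One way to support this review is an append-only log in which each record answers the five questions listed above. The sketch assumes hypothetical names (`AssessmentRecord`, `AuditLog`) and illustrative field values; a production system would persist records in a database with access controls rather than in memory.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)  # frozen: a record cannot be edited after it is written
class AssessmentRecord:
    person_id: str
    criteria_version: str           # what criteria were in effect
    instrument: str                 # what measurement instrument was used
    evidence_refs: tuple[str, ...]  # what evidence was provided
    approved_by: str                # who approved the outcome
    assessed_on: date               # when the assessment occurred
    outcome: str


class AuditLog:
    """Append-only store: records are added, never modified or deleted."""

    def __init__(self) -> None:
        self._records: list[AssessmentRecord] = []

    def append(self, record: AssessmentRecord) -> None:
        self._records.append(record)

    def history(self, person_id: str) -> list[AssessmentRecord]:
        # Oldest first, so the sequence of decisions can be reconstructed
        return sorted((r for r in self._records if r.person_id == person_id),
                      key=lambda r: r.assessed_on)


log = AuditLog()
log.append(AssessmentRecord("E-102", "key-account-reporting v2", "structured checklist",
                            ("report-2024-03",), "J. Smith", date(2024, 4, 11), "met"))
for r in log.history("E-102"):
    print(r.assessed_on, r.criteria_version, r.approved_by, r.outcome)
```

Storing the criteria version with each record matters because criteria change over time; without it, an old outcome would be judged against today's standard.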

Expiry and Revalidation

Some competencies require periodic reassessment. Measurement systems should define:

- Validity periods

- Revalidation requirements

- Triggers for reassessment

- Consequences of lapse

Ongoing capability cannot be assumed without structured reassessment.
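Validity periods, reassessment triggers, and lapse can be combined into a simple status rule. The sketch below is illustrative only: `competency_status`, the 30-day warning window, and the single `reassessment_triggered` flag (standing in for events such as a role change) are assumptions, not defined rules from any particular system.

```python
from datetime import date, timedelta


def competency_status(
    last_assessed: date,
    validity_period: timedelta,
    today: date,
    warning_window: timedelta = timedelta(days=30),
    reassessment_triggered: bool = False,
) -> str:
    """Return 'current', 'due', or 'lapsed' for one assessed competency."""
    # First day on which the assessment no longer counts
    expires_on = last_assessed + validity_period
    if today >= expires_on:
        return "lapsed"  # capability can no longer be assumed
    if reassessment_triggered or today >= expires_on - warning_window:
        return "due"  # reassessment required before expiry, or triggered by an event
    return "current"


last = date(2023, 6, 1)
one_year = timedelta(days=365)
print(competency_status(last, one_year, date(2024, 3, 1)))   # current
print(competency_status(last, one_year, date(2024, 5, 15)))  # due
print(competency_status(last, one_year, date(2024, 6, 1)))   # lapsed
print(competency_status(last, one_year, date(2024, 3, 1), reassessment_triggered=True))  # due
```

What a "lapsed" status means in practice (for example, restricting the person from the task) is a consequence the system still has to define explicitly.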

Implications for AI

AI-generated summaries, recommendations, or classifications do not replace measurement. AI may assist in:

- Summarizing evidence

- Flagging inconsistencies

- Identifying potential gaps

AI does not determine whether sufficient evidence exists unless that requirement has been explicitly defined. If evidence thresholds are not explicit, AI-generated summaries may inappropriately suggest achievement, because the underlying models tend, through their training, to lean toward positive interpretations.
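The principle can be enforced in how decisions are recorded: the outcome comes from an explicit threshold, and any AI summary is attached as advisory context only. The sketch uses hypothetical names (`Decision`, `decide`) and a simple evidence count as a stand-in for a real evidence requirement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    outcome: str               # "met", "not met", or "undetermined"
    ai_summary: Optional[str]  # advisory context only; never changes the outcome


def decide(evidence_count: int, required_count: Optional[int],
           ai_summary: Optional[str] = None) -> Decision:
    """Apply an explicit evidence threshold; the AI summary is attached, not evaluated."""
    if required_count is None:
        # No defined threshold means there is nothing to measure against
        return Decision("undetermined", ai_summary)
    outcome = "met" if evidence_count >= required_count else "not met"
    return Decision(outcome, ai_summary)


print(decide(2, None, "Consistently strong performer.").outcome)  # undetermined
print(decide(2, 3, "Appears fully competent.").outcome)           # not met
print(decide(3, 3).outcome)                                       # met
```

However favourable the summary text, it cannot turn an undefined or unmet requirement into an achievement.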

Building a Measurement System

Defensible measurement requires:

- Observable criteria

- Defined proficiency expectations

- Selected measurement mechanisms

- Explicit evidence requirements

- Defined approval and calibration processes

- Version control and audit logging

Measurement must be transparent, repeatable, and reviewable. Without structured measurement and evidence, ratings remain unsupported opinion.

FAQs

What types of measurement are used in talent systems?

Common approaches include:

- Documented direct observation on the job

- Structured skills demonstration

- Knowledge testing

- Capability review (qualifications, training, experience)

- Job performance metrics

- Talent potential assessment

Different roles may require different measurement mechanisms.

How often should competency or performance be measured?

Frequency depends on risk and role criticality.

- High-risk or regulated roles may require periodic reassessment and defined recertification cycles.

- Operational roles may require assessment at onboarding and on role change, plus some periodic reassessment.

- Strategic roles may rely on milestone or outcome-based verification.

Measurement frequency should align with risk exposure and decision impact.

What affects measurement validity?

Validity depends on whether:

- Measurement reflects role requirements

- Criteria are specific and observable, so they are not open to varying interpretation

- The measurement method is appropriate to the criterion

- A defined evidence requirement exists and the evidence is recorded

What is evidence in a talent management system?

Evidence is the verifiable information used to determine whether defined criteria have been met.

Examples include documented task outputs, structured observations, test results, supervisor validation, certifications, or recorded performance data.

Why are evidence requirements important?

An evidence requirement defines what constitutes sufficient proof of competence or performance. Without explicit requirements, achievement is interpreted rather than demonstrated, and consistency is lost.

What must be recorded for auditability?

A defensible system should record:

- The criteria in effect at the time

- The measurement method used

- The evidence provided

- Who validated the outcome

- The date of assessment

Auditability depends on the integrity of historical records.